Secrets for Achieving Rapid Success in End-to-End Test Automation

Has your team implemented test automation? With so many paid and free test automation tools and services available, I suspect many of you have taken up the challenge. However, I'm afraid some of you may have given up before putting those tests into full daily operation. In this article, I offer tips for avoiding common pitfalls, with a specific focus on E2E (end-to-end) testing.

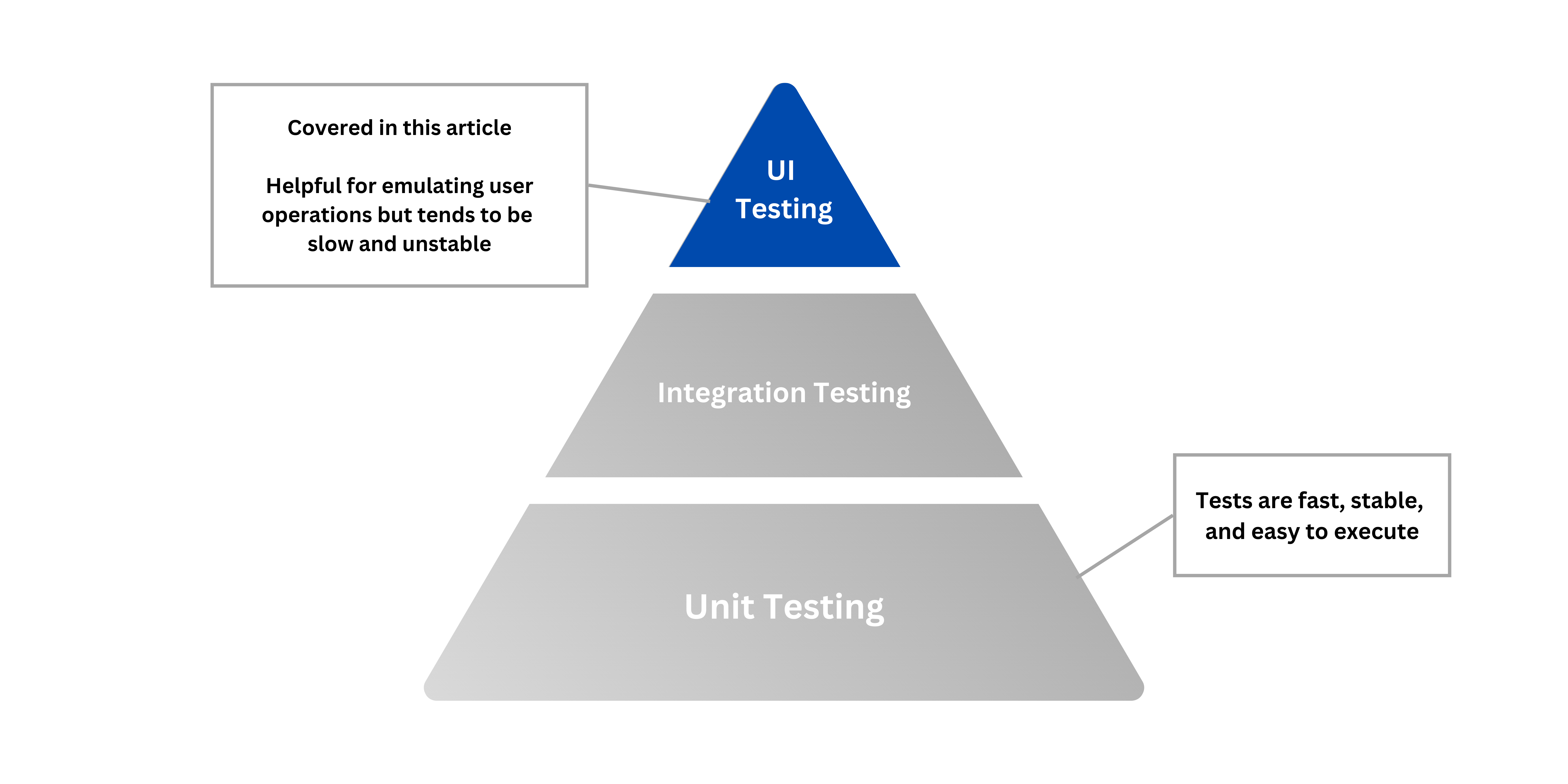

Automated testing can be categorized into three groups, as outlined in The Way of the Web Tester: A Beginner's Guide to Automating Tests by Jonathan Rasmusson:

- UI testing: Simulates user interface operations, replicating end-user actions.

- Integration testing: Verifies the connectivity of core services without using the user interface.

- Unit testing: Conducts quick tests on small program units at the code level.

[Figure: UI tests (covered in this article) emulate user operations but tend to be slow and unstable.]

In this article, "E2E testing" refers to UI testing as described above. The term "end-to-end" reflects that these tests exercise the entire system from the outside, as a user would, to confirm that it works as a whole.

Common Pitfalls in E2E Automated Testing

Teams may give up on automated testing before it is fully in place for several reasons:

- The selected tools were inadequate or not a good fit for the use case.

- Insufficient time and resources for test creation.

- Unstable test results leading to a loss of confidence in their reliability.

- Tests become obsolete due to changes in specifications.

The last reason is likely the most common, because automated testing is an ongoing effort. Tests must be kept up to date with the application under test; otherwise, failures no longer indicate real problems, and the tests become useless. This challenge is specific to E2E testing; unit tests, by contrast, are written during development and tend to be maintained naturally along with the code. To prevent this and build a habit of "living" automated tests that catch defects and keep the whole organization stable, two factors are key: setting the right goal and introducing automation step by step.

The Goal of Automation

It is easy to fall into the trap of viewing the purpose of test automation as simply reducing workload and costs. But automating tests for daily operation requires significant time and effort. Compare the time it takes to run a test manually with the time it takes to create the automated version, and the gap can be overwhelming. Should we then give up on automation because it takes years to break even?
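To make the break-even question concrete, here is a minimal Python sketch under simple, hypothetical assumptions: building an automated test costs a fixed number of hours up front, each automated run still needs some upkeep (checking results, fixing the test), and each automated run replaces one manual run. The function name, parameters, and figures are illustrative, not measured data.

```python
import math


def breakeven_runs(build_hours: float, manual_hours_per_run: float,
                   upkeep_hours_per_run: float) -> int:
    """Return how many runs it takes for automation to cost no more than manual testing.

    Hypothetical cost model:
      automated cost after n runs = build_hours + n * upkeep_hours_per_run
      manual cost after n runs    = n * manual_hours_per_run
    """
    saving_per_run = manual_hours_per_run - upkeep_hours_per_run
    if saving_per_run <= 0:
        # If upkeep per run is as large as the manual effort, automation never pays back.
        raise ValueError("upkeep per run must be smaller than manual effort per run")
    return math.ceil(build_hours / saving_per_run)


if __name__ == "__main__":
    # Illustrative numbers only: 40 h to build, 2 h per manual run, 0.5 h upkeep per run.
    print(breakeven_runs(40, 2, 0.5))  # 27
```

Under this toy model, 27 runs is about half a year of weekly runs but only a little over a month of daily runs on working days, which is one reason run frequency matters as much as the cost of creating the tests.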

I believe the true purpose of automated tests is to boost productivity in both development and QA by detecting defects and operational issues. Automated tests can run as often as needed without tying up people. By running them every day in the development environment, we can catch problems much earlier in the development phase.

Early detection makes fixes easier, which benefits developers. Tracking down the cause of a defect can be time-consuming, especially when the code changes frequently during development. Fixing it can be painstaking, because developers must recall which specific change introduced it. Worse, when the issue involves other developers' changes, the investigation takes even longer. Running tests daily, by contrast, keeps the development and testing environments clean and lets the entire organization stay productive.

Automated testing also benefits the QA division. If issues surface only during the testing period after development, the launch may have to be delayed to make time for fixes. By running automated tests daily during development, the QA division can start the testing period with a product of decent quality. Continuing to run the tests during the testing period also catches regressions (previously working features that stop working).

The Key to Success: Establish a Daily Test Execution Routine

As mentioned earlier, running tests every day boosts development and QA productivity, and this routine is exactly how automated testing takes root in an organization. Daily test execution yields several desirable outcomes:

- Team members appreciate the daily tests, as checking the results becomes part of their routine.

- With a higher number of daily executions compared to weekly or monthly tests, the automation investment provides better value for money.

- The team's mindset shifts from "let's rely on manual processes" to "let's focus on maintenance."

- When the entire team acknowledges the importance of automated testing maintenance, newcomers will carry on the spirit, even if initial members leave the organization.

Conversely, if tests run only before official releases, the pitfalls listed at the beginning of this article will occur even though automated tests exist:

- When all changes made during development are tested at once, it becomes extremely difficult to tell whether a failure stems from the automated test itself or from an actual defect.

- Because failures are hard to isolate, some are overlooked and left unresolved after testing, and the tests lose their meaning.


I develop MagicPod, an automated testing platform. Many of our clients who have used automated testing successfully over a long period emphasize its value in boosting development and QA productivity, and they insist on running tests every day. MagicPod itself has run its automated tests daily since its inception, and through ongoing conversations with our clients, I have come to appreciate how important this practice is.

Start Small and Manageable

So, what is the first step when embarking on automated testing (after selecting the tool)? It is to establish a flow to:

- Obtain the latest version of the system under development.

- Execute a test, any test, every single day.

- Notify the entire team about the results.

It is perfectly fine to begin with a single test, because the main objective at this stage is to build the basic workflow that all further automation depends on. Before adding more test cases, make sure each case can be run repeatedly without manual intervention. This gives your team a foundation to build on and makes it easy to verify that the tests you create actually work.

A single case will not reveal every issue, but it can catch critical problems during development (e.g., a broken transition between key screens) that would otherwise block QA work.
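The daily flow described above (get the latest build, run at least one test, notify the team) can be sketched in Python as follows. This is a minimal illustration, not MagicPod's implementation: `fetch_latest_build`, `run_tests`, and `notify` are hypothetical callables you would wire to your own CI checkout, test runner, and team chat or email.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable


@dataclass
class CaseResult:
    name: str
    passed: bool
    message: str = ""


def run_daily(
    fetch_latest_build: Callable[[], str],
    run_tests: Callable[[str], list[CaseResult]],
    notify: Callable[[str], None],
    today: date,
) -> bool:
    """Run one daily cycle and return True if every test passed."""
    build_id = fetch_latest_build()              # 1. obtain the latest version
    results = run_tests(build_id)                # 2. execute a test (any test)
    failed = [r for r in results if not r.passed]
    status = "PASSED" if not failed else "FAILED"
    lines = [f"[{today.isoformat()}] E2E run on build {build_id}: {status} "
             f"({len(results) - len(failed)}/{len(results)} passed)"]
    for r in failed:
        lines.append(f"  - {r.name}: {r.message}")
    notify("\n".join(lines))                     # 3. share the result with the whole team
    return not failed


if __name__ == "__main__":
    run_daily(
        fetch_latest_build=lambda: "build-123",
        run_tests=lambda build: [CaseResult("login_and_open_dashboard", True)],
        notify=print,
        today=date(2024, 1, 15),
    )  # prints: [2024-01-15] E2E run on build build-123: PASSED (1/1 passed)
```

Injecting the three steps as parameters keeps the scheduling (a nightly cron job or CI schedule, for example) separate from the tools, so the single starter test can later grow into a suite without changing the flow.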

Is that all, you may ask? The most important factor in getting your team to adopt automated testing is visible output. Even a minimal result gives the team a far deeper understanding than explanations passed down from senior or other team members. Seeing how the tests actually run and what kinds of notifications arrive gives everyone a concrete picture of how automated testing will work for their own application.

Getting Ready for Launch

Once the concrete foundation for daily tests is established, you are ready to expand into large-scale automated testing. Follow these steps:

1. Begin with one case per day.

2. Gradually increase the number of cases while closely observing stability.

3. Cover a wide range of general and straightforward system functions.

4. Establish a usage period with a constant number of cases.

5. Expand test cases while considering options beyond E2E.

Step 1 was covered in the previous section, so let's look more closely at steps 2 to 5.

2. Gradually increase the number of cases while closely observing stability

Running tests every day will surface a series of problems, such as unstable results. Address them one by one while gradually adding tests. It might seem more efficient to spend a long stretch creating many tests at once and then run them all on subsequent days, but that efficiency is an illusion. Tests added without verifying that they can be maintained end up neglected and unmanageable. So expand incrementally, verifying as you go, rather than all at once.
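One way to make "gradually, while observing stability" concrete is a simple rule: add the next small batch of cases only after the suite has run cleanly for several consecutive days. The sketch below assumes a hypothetical daily history in which each entry is True if that day's run had no failures caused by the tests themselves (real defects found by the tests don't count against stability). The 5-day window and batch size of 2 are illustrative choices, not recommendations from the source.

```python
from collections.abc import Sequence


def can_add_more_cases(stable_days: Sequence[bool], window: int = 5) -> bool:
    """Return True if each of the last `window` daily runs was stable.

    `stable_days` is ordered oldest to newest. With fewer than `window` days on
    record there is not enough evidence yet, so the answer is False.
    """
    if len(stable_days) < window:
        return False
    return all(stable_days[-window:])


def next_suite_size(current: int, stable_days: Sequence[bool],
                    batch: int = 2, window: int = 5) -> int:
    """Grow the suite by `batch` cases after a stable window; otherwise hold steady."""
    if can_add_more_cases(stable_days, window):
        return current + batch
    return current


if __name__ == "__main__":
    history = [True, False, True, True, True, True, True]
    print(next_suite_size(3, history))  # 5: the last five days were all stable
```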

At this stage, it is advisable to develop mechanisms and rules for sustainable operation. For example:

- Establish rules (naming conventions, shared reusable components, etc.) for implementing test cases.

- Maintain test data so that results stay consistent across repeated runs.

- Build a mechanism that keeps date-dependent tests passing as the date changes.
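For the last two points, a common approach is to compute expected dates relative to the day of the run instead of hard-coding them, and to generate fresh test data on each run so repeated executions don't collide with leftovers from earlier runs. The sketch below assumes a hypothetical feature that sets a due date seven days after today and displays it in `YYYY/MM/DD` format; the function names and the format are illustrative.

```python
import uuid
from datetime import date, timedelta


def expected_due_date(today: date, days_ahead: int = 7) -> date:
    """Compute the expected date relative to the run date, so the test still
    passes tomorrow, next month, and across year boundaries."""
    return today + timedelta(days=days_ahead)


def format_for_ui(d: date) -> str:
    """Render a date the way the (hypothetical) screen displays it."""
    return d.strftime("%Y/%m/%d")


def unique_username(prefix: str = "e2e") -> str:
    """Create a fresh user name per run so repeated executions never reuse
    data left behind by a previous run."""
    return f"{prefix}_{uuid.uuid4().hex[:8]}"


if __name__ == "__main__":
    today = date.today()  # in a real test, read once at the start of the run
    print("expect on screen:", format_for_ui(expected_due_date(today)))
    print("sign up as:", unique_username())
```

Passing `today` in as a parameter, rather than calling `date.today()` inside the helpers, also lets you check the end-of-month and end-of-year cases deterministically.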

3. Cover a wide range of general and straightforward system functions

Start by automating checks that basic features and screens work across the whole system. Rather than automating detailed tests of individual functions under various conditions, prioritize broad coverage of general, straightforward functions and screens. Avoid simply automating existing manual tests from top to bottom; at this stage, focus on essential test cases that can detect major functional regressions.

4. Establish a usage period with a constant number of cases

Before expanding further, keep the number of cases fixed for about one to two months and assess the maintenance workload and your team's daily operations. The main tasks during operation are:

- Checking the daily results and isolating the cause of each failure (a system defect, an incomplete test case, or some other external factor).

- Reporting defects.

- Fixing defective test cases.
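The three tasks above amount to a small triage loop. The sketch below records the cause a person has confirmed for each failure and maps it to the follow-up action. `FailureCause`, `next_action`, and `triage_summary` are hypothetical names, and the action for external factors (rerun and note the environment issue) is an assumption, since the right response depends on your environment.

```python
from enum import Enum


class FailureCause(Enum):
    SYSTEM_DEFECT = "system defect"
    TEST_CASE = "incomplete or defective test case"
    EXTERNAL = "other external factor"


def next_action(cause: FailureCause) -> str:
    """Map a confirmed cause to the follow-up task."""
    actions = {
        FailureCause.SYSTEM_DEFECT: "report the defect to the development team",
        FailureCause.TEST_CASE: "fix the test case",
        # Assumption: external issues (network, test environment) are rerun and noted.
        FailureCause.EXTERNAL: "rerun the test and note the environment issue",
    }
    return actions[cause]


def triage_summary(confirmed: dict[str, FailureCause]) -> dict[FailureCause, list[str]]:
    """Group failed test names by their confirmed cause for the daily report."""
    summary: dict[FailureCause, list[str]] = {}
    for test_name, cause in confirmed.items():
        summary.setdefault(cause, []).append(test_name)
    return summary


if __name__ == "__main__":
    today = {"login": FailureCause.TEST_CASE, "checkout": FailureCause.SYSTEM_DEFECT}
    for cause, names in triage_summary(today).items():
        print(f"{cause.value}: {', '.join(names)} -> {next_action(cause)}")
```

Deciding the cause stays a human judgment here on purpose; the code only keeps the daily report consistent so nothing confirmed as a defect is left unreported.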

Make sure the team can handle these tasks without excessive burden. E2E tests are generally more prone to unstable results than unit tests, but if instability is too frequent, isolating failures takes time and the operational burden grows. You will need to make judgment calls: rework how an unstable test case is implemented, or, if it simply cannot be stabilized, exclude it from automated testing.
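One practical signal for "too many instabilities" is a test that both passes and fails on the same build, since the code under test did not change between those runs. The sketch below computes, for each test, the fraction of builds on which its outcome was mixed, and flags tests above a threshold as candidates for rework or exclusion. The `(test_name, build_id, passed)` record format and the 0.2 threshold are assumptions for illustration.

```python
from collections import defaultdict
from collections.abc import Iterable

Run = tuple[str, str, bool]  # (test_name, build_id, passed)


def flaky_rate(runs: Iterable[Run]) -> dict[str, float]:
    """Fraction of builds on which each test both passed and failed."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for name, build, passed in runs:
        outcomes[(name, build)].add(passed)

    builds_seen: dict[str, int] = defaultdict(int)
    mixed_builds: dict[str, int] = defaultdict(int)
    for (name, _build), seen in outcomes.items():
        builds_seen[name] += 1
        if len(seen) == 2:  # saw both True and False on the same build
            mixed_builds[name] += 1
    return {name: mixed_builds[name] / count for name, count in builds_seen.items()}


def exclusion_candidates(runs: Iterable[Run], threshold: float = 0.2) -> set[str]:
    """Tests whose flaky rate exceeds `threshold`: rework them or drop them from the suite."""
    return {name for name, rate in flaky_rate(runs).items() if rate > threshold}


if __name__ == "__main__":
    history = [
        ("login", "b1", True), ("login", "b2", True),
        ("search", "b1", False), ("search", "b1", True),  # retried on the same build
        ("search", "b2", True),
        ("checkout", "b1", False), ("checkout", "b1", False),  # fails every time
    ]
    print(exclusion_candidates(history))  # {'search'}
```

Note that `checkout` is not flagged: it fails consistently on the same build, which points to a real defect rather than instability, so it belongs in the triage flow rather than on the exclusion list.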

5. Expand test cases while considering options beyond E2E

Once you are confident in your maintenance capabilities, you can begin automating more complex test cases, such as verifying error messages and checking behavior in special environments. However, there is no need to implement every check as an E2E test; doing so is time-consuming and ultimately wastes resources. Unit tests and API tests are viable alternatives for many checks. The key is to establish a virtuous cycle that provides prompt feedback.

Summary

The two essential elements for rapid and successful implementation of E2E test automation are:

- Goal setting: Boost development and QA productivity by detecting issues early.

- Step-by-step procedures: Establish an environment for daily test execution before expanding the number of tests.

Let's strive for sustainable automated testing by steadily running tests!


MagicPod is a no-code, AI-driven test automation platform for mobile and web applications, designed to speed up release cycles. Unlike traditional "record & playback" tools, MagicPod uses an AI self-healing mechanism: test scripts are automatically updated when the application's UI changes, which significantly reduces maintenance overhead and helps teams focus on development.