Mastering Daily Automated E2E Testing: Essential Tips for Efficiency

In this article, we'll delve deeper into common problems that arise when executing daily automated E2E tests, and provide solutions to overcome them. We'll explore issues such as spending excessive time analyzing failed tests, dealing with flaky tests, and managing lengthy execution times. We will also discuss test case independence as the key to addressing these challenges effectively.

Note: This is a follow-up article for this post.

Common Problems in Operation

Let's take a look at some common problems that come with automated testing:

- Spending a significant amount of time on analyzing failed tests: Analyzing the results of automated tests can be time-consuming, because each type of failure calls for a different response. It is important to determine whether a failure is due to a defect in the application, a mistake in the test case, or an environmental factor. If this analysis takes too long, automated testing becomes burdensome and demotivating, and the team stops getting value from the tests.

- Dealing with flaky tests (tests with contradictory results): Flaky tests, which yield inconsistent results despite following the same procedure, are a common problem. The failure analysis mentioned above becomes even more time-consuming when flaky tests are involved. The inconsistencies may stem from factors such as incompatible browser versions or minor delays in the application's response. In some cases, even external factors like a virus scan running on the computer during testing can cause failures.

- Lengthy execution time: End-to-end (E2E) tests typically take longer to execute than unit tests. It is crucial not to create too many of them, as this leads to prolonged execution times. Following the test pyramid principle and focusing on a few core tests is advisable. An overloaded test suite can take several hours to complete, which delays feedback, hinders maintenance, and eventually renders the tests ineffective.

Unfortunately, there are no silver bullet solutions to address all these problems. However, the key phrase to remember is "test case independence."

What is Independence?

Let's consider the well-known "FIRST principles" as guidelines for great unit tests: F stands for "fast," I for "isolated/independent," R for "repeatable," S for "self-validating," and T for "timely." While the prerequisites for unit tests and E2E tests differ, the principles of "independence" and "repeatability" are crucial for both. An independent, repeatable test can be run on its own as a single case, does not have to run in the same order as in the daily run, and gives the same result no matter how many times it is run.

Achieving test case independence offers several benefits:

- Quick and independent re-execution for failures: Independent test cases make it easier to rerun failed tests. This reduces the time spent on analysis and bug fixing, transforming the process from a time-consuming ordeal to a more efficient workflow.

- A simple solution for flakiness: Independent test cases facilitate easy re-execution, enabling efficient identification of flaky tests. Flakiness often arises from tests that cannot be repeated reliably, such as when data created in a previous test is not properly cleared. By ensuring test case independence, flakiness can be reduced, allowing the focus to remain on productive work.

- Shortened execution time: Independent test cases enable parallel execution in most cases. This reduces the overall execution time, enabling timely feedback for developers.

With these benefits in mind, achieving test case independence becomes a feasible and rewarding endeavor.
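To make the first benefits concrete, here is a minimal Python sketch that is not tied to any particular tool. It assumes each test case is a plain callable that raises `AssertionError` on failure; `run_once`, `classify`, and `run_suite` are hypothetical names chosen for illustration. Because the cases share no state, they can run in parallel, and a failed case can be rerun on its own to separate consistent failures from flaky ones.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict

# Assumption: a test case is a callable that raises AssertionError when it fails.
TestCase = Callable[[], None]


def run_once(test: TestCase) -> bool:
    """Run a single test case in isolation and report whether it passed."""
    try:
        test()
        return True
    except AssertionError:
        return False


def classify(test: TestCase, reruns: int = 2) -> str:
    """Run a case; if it fails, rerun only that case to see if the failure repeats."""
    if run_once(test):
        return "pass"
    retries = [run_once(test) for _ in range(reruns)]
    # A failure followed by at least one pass means the result is inconsistent.
    return "flaky" if any(retries) else "fail"


def run_suite(tests: Dict[str, TestCase], workers: int = 4) -> Dict[str, str]:
    """Run independent cases in parallel; the execution order does not matter."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(classify, test) for name, test in tests.items()}
        return {name: future.result() for name, future in futures.items()}
```

A case reported as "fail" deserves analysis first, while "flaky" cases can be collected and investigated separately. This only works when rerunning a case alone is meaningful, which is exactly what independence provides.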

Concrete Actions for Test Independence

To achieve test case independence, consider the following strategies (I will be using MagicPod, a test automation tool developed by my company, to illustrate):

1) Never Rely on the Initial Status of the Application Under Test

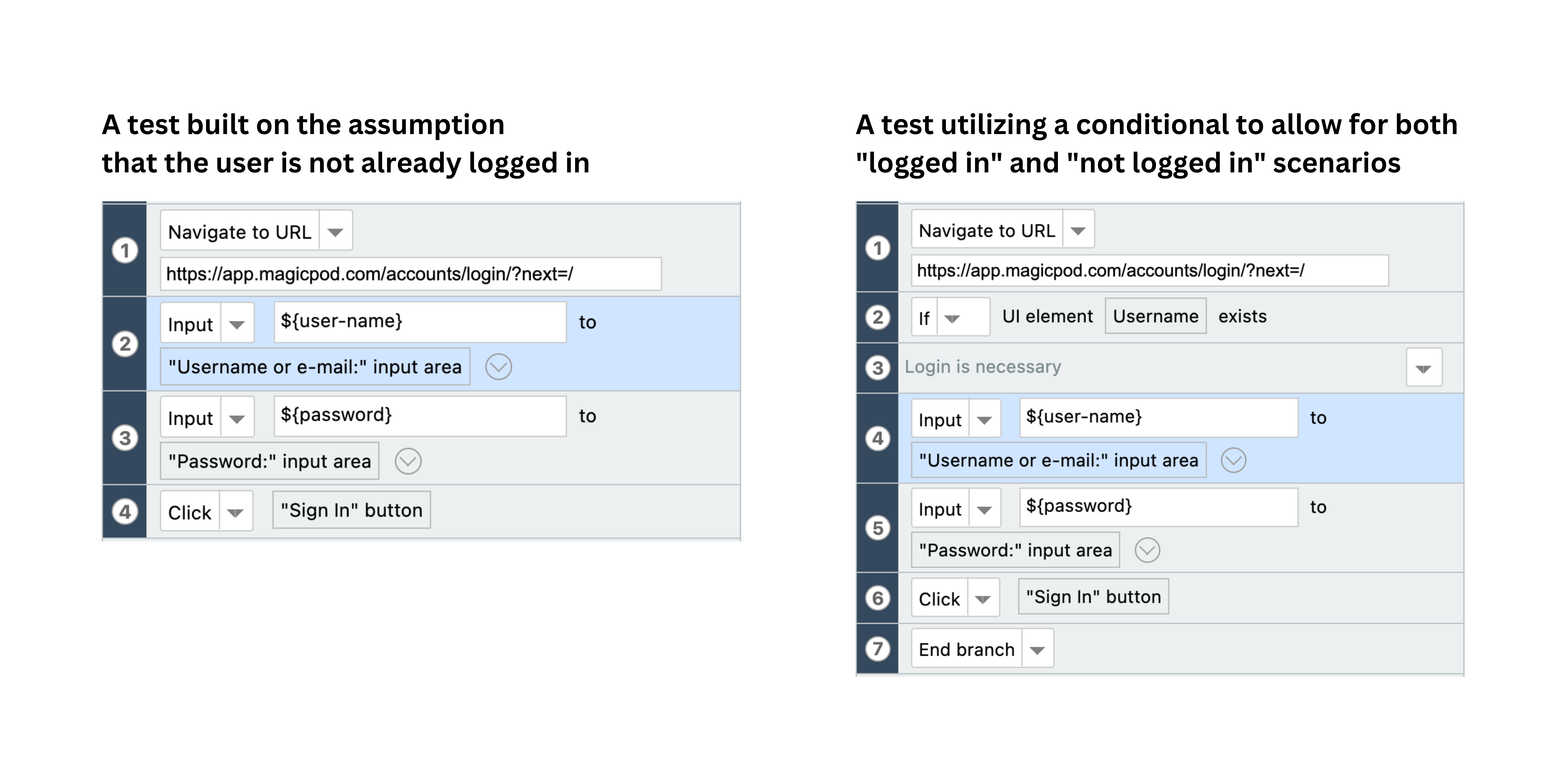

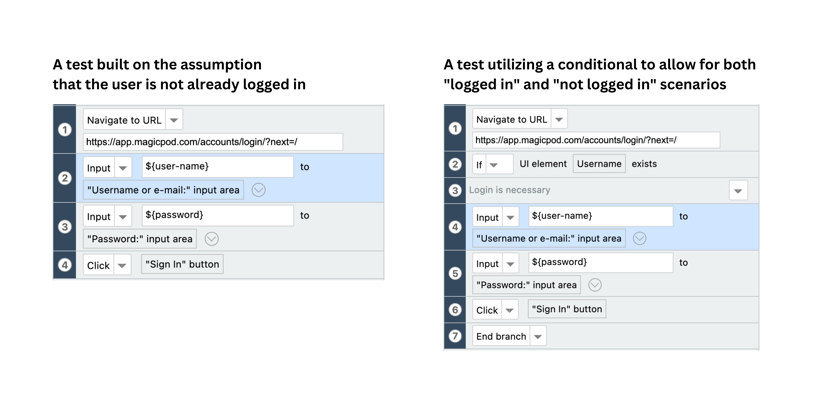

Avoid relying on the specific status of the application when starting test operations. For example, differentiating between being logged in or not, or having completed a tutorial or not, can impact test outcomes. To create independent tests, utilize branch conditions to create tests for numerous scenarios (Below, I've created a test case using a conditional statement, that "checks" whether the user is logged in or not)
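In a scripted framework, the same idea can be written as a precondition helper. The following is only a sketch: `FakeApp` is a hypothetical in-memory stand-in for the application under test, used so the example runs on its own, and `ensure_ready` is an illustrative name rather than a function of any real tool.

```python
class FakeApp:
    """Hypothetical in-memory stand-in for the application under test."""

    def __init__(self, logged_in: bool = False, tutorial_done: bool = False):
        self.logged_in = logged_in
        self.tutorial_done = tutorial_done

    def log_in(self, user: str, password: str) -> None:
        self.logged_in = True

    def skip_tutorial(self) -> None:
        self.tutorial_done = True


def ensure_ready(app: FakeApp, user: str, password: str) -> None:
    """Bring the app to a known state, whatever state it starts in."""
    if not app.logged_in:       # branch: log in only when needed
        app.log_in(user, password)
    if not app.tutorial_done:   # branch: dismiss the tutorial only when it is pending
        app.skip_tutorial()


def open_settings_test(app: FakeApp) -> str:
    """Example test body that no longer cares how the previous run left the app."""
    ensure_ready(app, "qa-user", "secret")
    return "settings opened" if app.logged_in and app.tutorial_done else "blocked"
```

The test body behaves the same for all four combinations of starting state, so it can run first, last, or alone.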

2) Separate Data or Accounts From Procedures

Especially for parallel execution, it is essential to separate data across different test cases. If you share data among multiple test cases, making a change in one case can cause other cases to fail, making maintenance very difficult. It may not be a significant issue for cases that only involve display confirmation, but in most tests, you will likely make some changes to the data and verify the results. Therefore, it is crucial to separate the data for each test case. Additionally, depending on the system under test, many systems have different data for each account (user), so separating them by account can be beneficial. However, separating them too much can make the management of authentication information complex.

In my team, we handle it as follows:

- General operation tests that do not depend on account characteristics: Use general-purpose test accounts.

- Tests that involve external ID integration or specific account features (such as enterprise users): Use dedicated accounts for each test case.
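One way to encode this split is a small account registry. The sketch below is an assumption about how such a registry might look, not a description of our actual setup: `AccountPool`, `lease`, and the account names are hypothetical. General-purpose accounts are leased so that two tests running in parallel never hold the same one, while tests listed as dedicated always receive their own account.

```python
import queue
from contextlib import contextmanager
from typing import Dict, Iterable, Iterator


class AccountPool:
    """Hypothetical registry that hands out test accounts."""

    def __init__(self, general: Iterable[str], dedicated: Dict[str, str]):
        self._general: "queue.Queue[str]" = queue.Queue()
        for account in general:
            self._general.put(account)
        self._dedicated = dict(dedicated)  # test name -> its own account

    @contextmanager
    def lease(self, test_name: str) -> Iterator[str]:
        # Tests with special needs (external ID integration, enterprise
        # features) always get the account reserved for them.
        if test_name in self._dedicated:
            yield self._dedicated[test_name]
            return
        # Everything else borrows a general-purpose account; get() blocks
        # until one is free, so parallel tests never share an account.
        account = self._general.get()
        try:
            yield account
        finally:
            self._general.put(account)
```

Usage would look like `with pool.lease("test_search") as account: ...`, which keeps the credentials out of the test procedure itself.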

3) Create Data Within Test Cases

Instead of relying on predefined data, create data dynamically within the test cases themselves. By doing so, even if there are data issues or defects in the previous execution, you can start the next execution in a clean state.

The idea of generating data every time may seem time-consuming. However, if the application under test has an API for data registration, you can quickly prepare the data.

To illustrate, E2E test automation tools usually offer a way to call a Web API independently of browser operations. This simplifies setup steps that would otherwise be difficult or time-consuming to perform solely through on-screen interactions.
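Outside of a dedicated tool, the same setup step can be sketched with Python's standard library. Everything specific here is an assumption for illustration: the `/api/items` endpoint, the bearer token, and the payload shape are hypothetical, and a real application will have its own API. The point is that each run registers fresh, uniquely named data before the browser steps begin.

```python
import json
import urllib.request
import uuid
from typing import Callable


def build_create_request(base_url: str, token: str, name_prefix: str) -> urllib.request.Request:
    """Build a POST request that registers a uniquely named record."""
    # A random suffix keeps data from different runs and parallel tests apart.
    payload = {"name": f"{name_prefix}-{uuid.uuid4().hex[:8]}"}
    return urllib.request.Request(
        url=f"{base_url}/api/items",  # hypothetical endpoint
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # hypothetical auth scheme
        },
        method="POST",
    )


def create_item(request: urllib.request.Request,
                send: Callable = urllib.request.urlopen) -> dict:
    """Send the request and return the decoded JSON response body."""
    # `send` is injectable so the function can be exercised without a server.
    with send(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

A test would call `create_item(build_create_request(...))` as its first step and then use the returned record in the on-screen steps, instead of assuming the record already exists.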

4) Utilize Variables

Utilize variables to handle dynamic data or content that may vary across test runs, such as dates and content like news that changes daily. By setting up variables, such as a date variable for future dates in hotel reservation tests or a title variable for comparing headlines on different pages, you can maintain flexibility and adaptability in your tests.

For example, let's say you need to input future dates every time you test a hotel reservation page. You can prepare a variable (let's call it "DATE") to store the dates and always input a date that is two days ahead, as shown in the figure below. By doing this, you can input the appropriate date every time you execute the test.

Furthermore, let's say you want to verify that the heading on one page matches the heading on a different page. You can store the heading on the first page as a variable called "TITLE" and compare it with the headline on the second page.
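In code, the two variables above might be computed as follows. This is a minimal sketch: the function names and the `YYYY-MM-DD` date format are assumptions, and the format should match whatever the reservation form expects.

```python
from datetime import date, timedelta
from typing import Optional


def future_date(days_ahead: int = 2, today: Optional[date] = None) -> str:
    """Return the date `days_ahead` days from today, formatted as YYYY-MM-DD."""
    base = today or date.today()  # `today` can be passed in to make runs reproducible
    return (base + timedelta(days=days_ahead)).strftime("%Y-%m-%d")


def headings_match(first: str, second: str) -> bool:
    """Compare two captured headings, ignoring surrounding whitespace."""
    return first.strip() == second.strip()


# DATE is recomputed on every run, so the reservation is always in the future.
DATE = future_date()
```

`TITLE` would be filled at run time with the heading read from the first page and then passed to `headings_match` together with the heading read from the second page.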

Although it may be possible to make an effort to fix the dates or the content of news in the test environment, being too fixated on them can potentially lead to overlooking defects that occur with specific data. In areas where flexibility is possible, it is advisable to actively use variables to conduct tests more dynamically.

5) Execute Tests Under Consistent Conditions

This point takes a slightly different approach, focusing more on "repeatability" than "independence." In E2E automated testing, results can easily vary due to even minor external factors. Therefore, it is important to execute tests in a clean environment where external factors are eliminated.

Many services now allow testing on specified browsers and devices in a cloud environment. Setting up and maintaining an execution environment yourself can be quite time-consuming, so it is advisable to rely on such services to stabilize the environment and focus on the test content.
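Whichever environment is used, it helps to write down the conditions the tests expect and to check them before a run. The sketch below shows one possible shape for such a check; the `PINNED` values and the `environment_drift` name are purely illustrative assumptions.

```python
from typing import Dict, Optional, Tuple

# Hypothetical pinned conditions for the daily run.
PINNED: Dict[str, str] = {
    "browser": "chrome",
    "browser_version": "126",
    "os": "linux",
    "viewport": "1280x800",
}


def environment_drift(expected: Dict[str, str],
                      actual: Dict[str, str]) -> Dict[str, Tuple[str, Optional[str]]]:
    """Return every setting whose actual value differs from the pinned one."""
    return {
        key: (value, actual.get(key))
        for key, value in expected.items()
        if actual.get(key) != value
    }
```

If the returned dictionary is not empty, the run can be stopped before any test executes, which keeps an environment problem from showing up as dozens of unrelated test failures.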

TL;DR

In this article, we covered the importance of test case independence for effective and efficient automated testing. By focusing on independence, we can overcome the challenges of time-consuming failure analysis, flaky tests, and lengthy execution times. While certain test tools may assist in resolving these issues, the responsibility ultimately lies with test engineers to design workflows that prioritize independence and proper data management.

In my next article, I'd like to share tips on test case creation to further optimize automated testing and save time. Stay tuned!

MagicPod is a no-code AI-driven test automation platform for testing mobile and web applications designed to speed up release cycles. Unlike traditional "record & playback" tools, MagicPod uses an AI self-healing mechanism. This means your test scripts are automatically updated when the application's UI changes, significantly reducing maintenance overhead and helping teams focus on development.